Since it exploded onto the scene in January after a newspaper exposé, Clearview AI has quickly become one of the most elusive, secretive and reviled companies in the tech startup scene.

The controversial facial recognition startup allows its law enforcement users to take a picture of a person, upload it and match it against its alleged database of 3 billion images, which the company scraped from public social media profiles.

But for a time, a misconfigured server exposed the company’s internal files, apps and source code for anyone on the internet to find.

Mossab Hussein, chief security officer at Dubai-based cybersecurity firm SpiderSilk, found the repository storing Clearview’s source code. Although the repository was protected with a password, a misconfigured setting allowed anyone to register as a new user and log in to the system storing the code.

The repository contained Clearview’s source code, which could be used to compile and run the apps from scratch. The repository also stored some of the company’s secret keys and credentials, which granted access to Clearview’s cloud storage buckets. Inside those buckets, Clearview stored copies of its finished Windows, Mac and Android apps, as well as its iOS app, which Apple recently blocked for violating its rules. The storage buckets also contained early, pre-release developer app versions that are typically only for testing, Hussein said.

The repository also exposed Clearview’s Slack tokens, according to Hussein, which, if used, could have allowed password-less access to the company’s private messages and communications.

Clearview has been dogged by privacy concerns since it was forced out of stealth following a profile in The New York Times, but its technology remains largely untested and the accuracy of its facial recognition unproven. Clearview claims it only allows law enforcement to use its technology, but reports show that the startup courted users from private businesses like Macy’s, Walmart and the NBA. This latest security lapse is likely to invite greater scrutiny of the company’s security and privacy practices.

[…]

Ton-That accused the research firm of extortion, but emails between Clearview and SpiderSilk paint a different picture.

Hussein, who has previously reported security issues at several startups, including MoviePass, Remine and Blind, said he reported the exposure to Clearview but declined to accept a bounty. He said the bounty's terms, if signed, would have barred him from publicly disclosing the security lapse.

It’s not uncommon for companies to use bug bounty terms and conditions or non-disclosure agreements to prevent the disclosure of security lapses once they are fixed. But experts told TechCrunch that researchers are not obligated to accept a bounty or agree to disclosure rules.

Ton-That said that Clearview has “done a full forensic audit of the host to confirm no other unauthorized access occurred.” He also confirmed that the secret keys have been changed and no longer work.

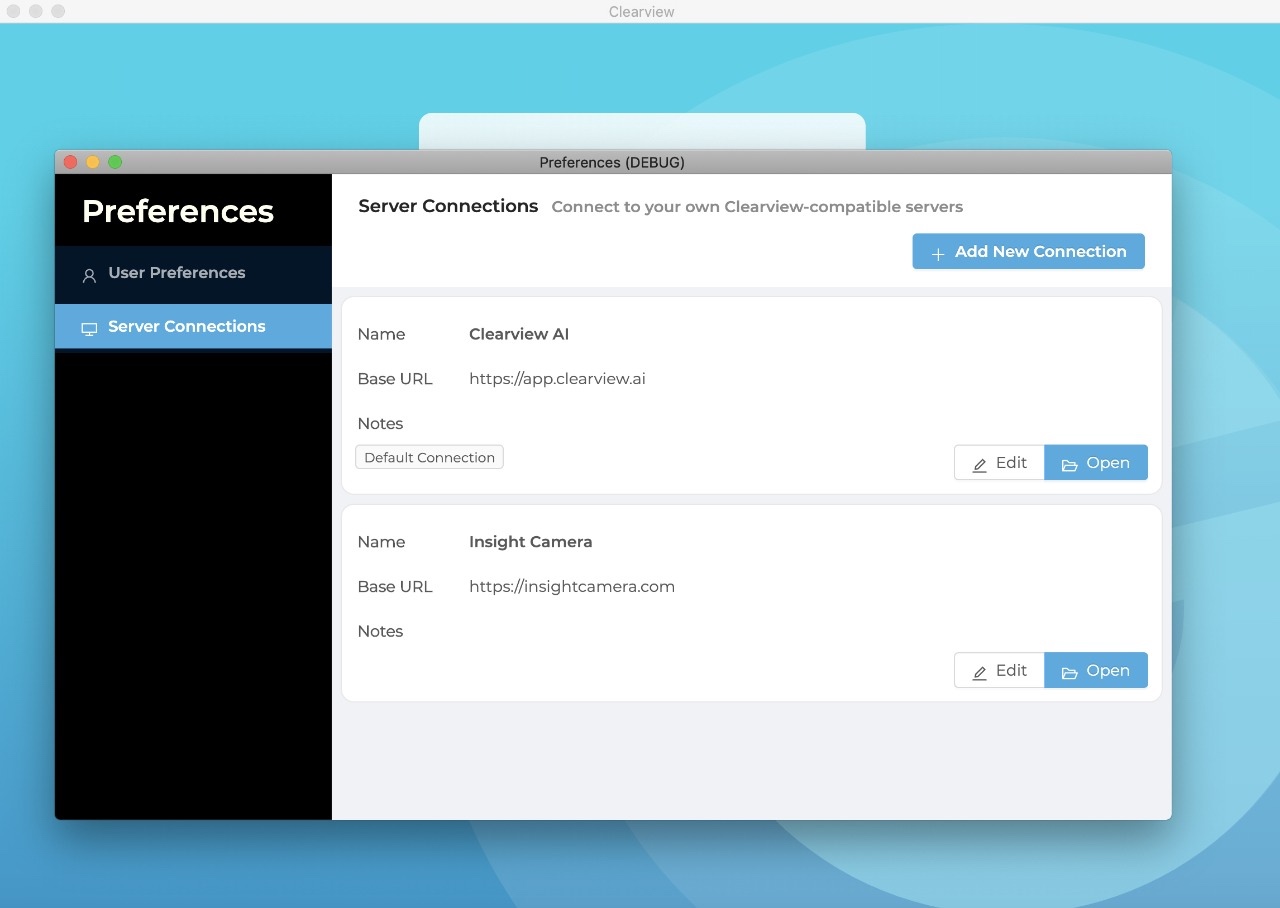

Hussein’s findings offer a rare glimpse into the operations of the secretive company. One screenshot shared by Hussein showed code and apps referencing the company’s Insight Camera, which Ton-That described as a “prototype” camera, since discontinued.

A screenshot of Clearview AI’s app for macOS. It connects to Clearview’s database through an API. The app also references Clearview’s former prototype camera hardware, Insight Camera.

According to BuzzFeed News, one of the firms that tested the cameras is New York City real estate firm Rudin Management, which trialed use of a camera at two of its city residential buildings.

Hussein said that he found some 70,000 videos in one of Clearview’s cloud storage buckets, taken from a camera installed at face-height in the lobby of a residential building. The videos show residents entering and leaving the building.

Source: Security lapse exposed Clearview AI source code | TechCrunch

Robin Edgar
