NVIDIA Research has invented a way to use AI to dramatically reduce video call bandwidth while simultaneously improving quality.

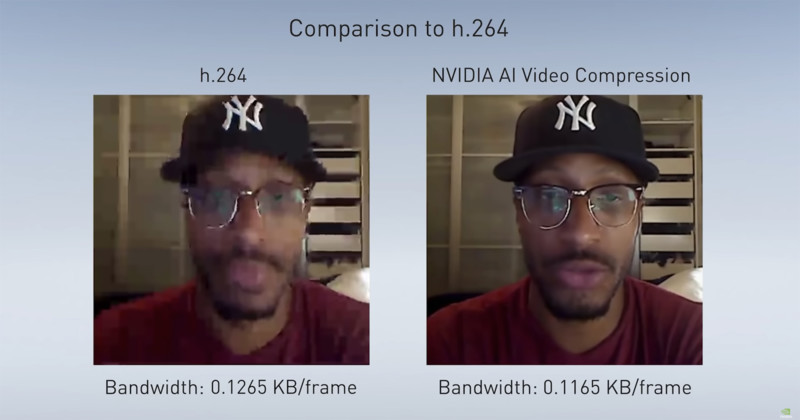

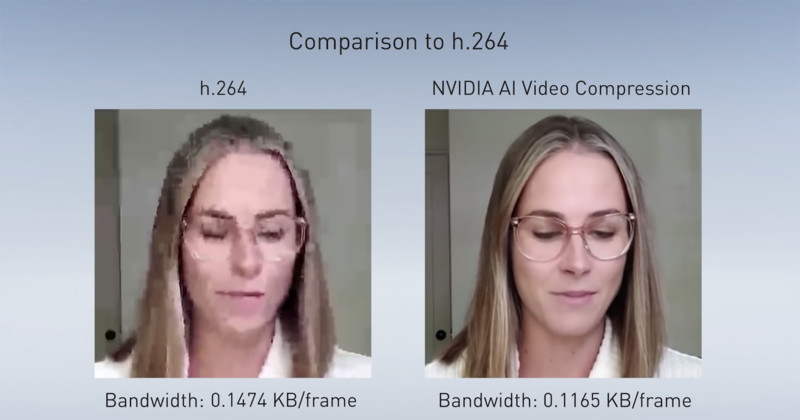

The researchers' results are remarkable: by replacing the traditional H.264 video codec with a neural network, they have managed to reduce the required bandwidth for a video call by an order of magnitude or more. In one example, the required data rate fell from 97.28 KB/frame to a measly 0.1165 KB/frame, a reduction to roughly 0.12% of the original bandwidth.
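To put those two per-frame figures in perspective, here is a quick back-of-the-envelope calculation in Python. The per-frame sizes come from the example above; the frame rate of 30 fps and the choice of 1,024 bytes per KB are assumptions made purely for illustration, since the source states neither.

```python
# Per-frame sizes quoted in the example above.
H264_KB_PER_FRAME = 97.28
NEURAL_KB_PER_FRAME = 0.1165

# Assumptions for illustration only (not stated in the source).
ASSUMED_FPS = 30
BYTES_PER_KB = 1024


def fraction_of_original(new_size, old_size):
    """Return the new size as a fraction of the old size."""
    return new_size / old_size


def kilobits_per_second(kb_per_frame, fps=ASSUMED_FPS):
    """Convert a per-frame size in KB to a stream rate in kbit/s."""
    bits_per_frame = kb_per_frame * BYTES_PER_KB * 8
    return bits_per_frame * fps / 1000


ratio = fraction_of_original(NEURAL_KB_PER_FRAME, H264_KB_PER_FRAME)
print(f"Neural stream is {ratio:.2%} of the H.264 size")
print(f"That is roughly {1 / ratio:.0f} times smaller")
print(f"H.264 at {ASSUMED_FPS} fps:  {kilobits_per_second(H264_KB_PER_FRAME):,.0f} kbit/s")
print(f"Neural at {ASSUMED_FPS} fps: {kilobits_per_second(NEURAL_KB_PER_FRAME):,.1f} kbit/s")
```

Under these assumptions the keypoint stream needs only a few tens of kilobits per second, while the quoted H.264 frame size would need tens of megabits per second.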

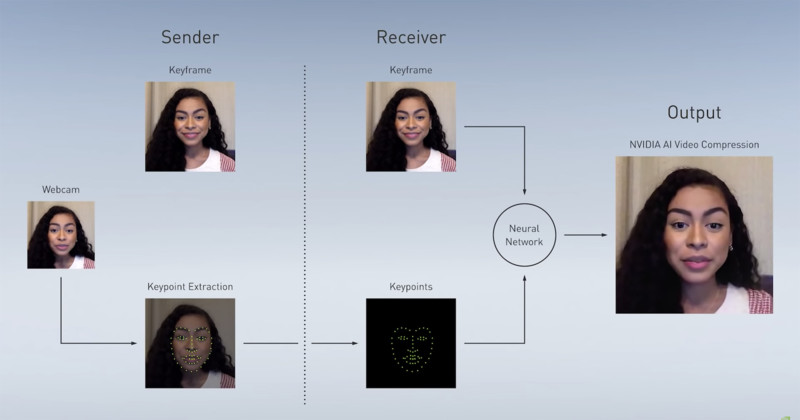

The mechanism behind AI-assisted video conferencing is breathtakingly simple: it replaces traditional full video frames with neural data. Typically, video calls work by sending H.264-encoded frames to the recipient, and those frames are extremely data-heavy. With AI-assisted video calls, the sender first transmits a single reference image of the caller. Then, instead of sending a stream of pixel-packed images, it sends only the positions of specific reference points (keypoints) on the image around the eyes, nose, and mouth.

A generative adversarial network (or GAN, a type of neural network) on the receiver side then uses the reference image combined with the keypoints to reconstruct subsequent images. Because the keypoints are so much smaller than full pixel images, much less data is sent and therefore an internet connection can be much slower but still provide a clear and functional video chat.
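The flow described above (one reference image, then a stream of keypoints that the receiver turns back into frames) can be sketched in a few lines of Python. This is a minimal illustration, not NVIDIA's implementation: the wire format (two unsigned 16-bit values per keypoint), the count of ten keypoints, the `Receiver` class, and `placeholder_generator` are all hypothetical, and the generator is a stub standing in for a trained GAN.

```python
import struct

COORD_MAX = 65535  # largest value of an unsigned 16-bit integer


def pack_keypoints(points):
    """Quantise normalised (x, y) pairs in [0, 1] and pack them as uint16."""
    flat = []
    for x, y in points:
        for value in (x, y):
            clamped = min(max(value, 0.0), 1.0)
            flat.append(round(clamped * COORD_MAX))
    return struct.pack(f"<{len(flat)}H", *flat)


def unpack_keypoints(payload):
    """Inverse of pack_keypoints: bytes back to a list of (x, y) floats."""
    count = len(payload) // 2
    values = struct.unpack(f"<{count}H", payload)
    return [(values[i] / COORD_MAX, values[i + 1] / COORD_MAX)
            for i in range(0, count, 2)]


class Receiver:
    """Holds the reference image and rebuilds a frame for each keypoint packet."""

    def __init__(self, generator):
        self.generator = generator  # hypothetical stand-in for the GAN
        self.reference = None

    def on_reference(self, image):
        # Sent once at the start of the call.
        self.reference = image

    def on_keypoints(self, payload):
        # Sent for every subsequent frame.
        if self.reference is None:
            raise RuntimeError("reference image has not arrived yet")
        return self.generator(self.reference, unpack_keypoints(payload))


def placeholder_generator(reference, keypoints):
    # Stub only: a real system would run a trained generator network here.
    return {"reference": reference, "keypoints": keypoints}


# Hypothetical example: ten keypoints in normalised image coordinates.
example_points = [(i / 10, 1 - i / 10) for i in range(10)]
packet = pack_keypoints(example_points)
print(f"Per-frame payload: {len(packet)} bytes")

receiver = Receiver(placeholder_generator)
receiver.on_reference(b"<reference image bytes>")
frame = receiver.on_keypoints(packet)
print(f"Reconstructed from {len(frame['keypoints'])} keypoints")
```

With this assumed encoding each frame costs 40 bytes on the wire, which shows why a keypoint stream is so much lighter than encoded pixels; the actual number and encoding of keypoints in NVIDIA's system may differ.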

In the researchers’ initial example, they show that a fast internet connection results in pretty much the same stream quality with both the traditional method and the new neural network method. But what’s most impressive are their subsequent examples, where slower internet speeds considerably degrade quality with the traditional method, while the neural network still produces extremely clear, artifact-free video feeds.

The neural network can work even when the subject is wearing a mask, glasses, headphones, or a hat.

With this technology, more people can use more video-call features while consuming far less data.

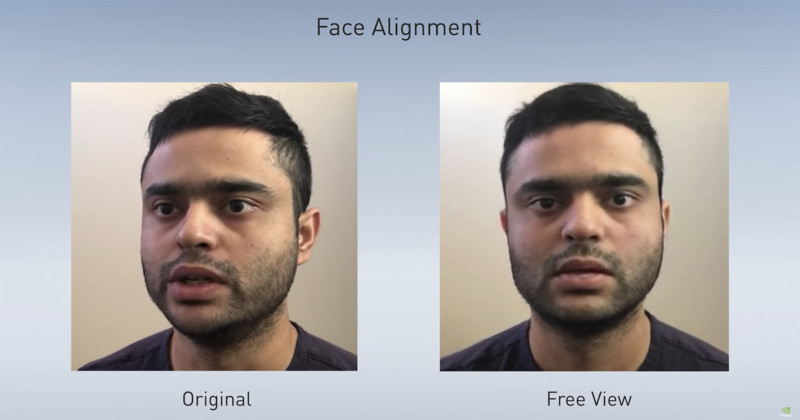

But the use cases don’t stop there: because the neural network works from reference data instead of the full stream, the technology can even change the apparent camera angle so that callers seem to be looking directly at the screen when they are not. Called “Free View,” this would allow someone whose camera sits off to the side of the screen to seemingly keep eye contact with those on a video call.

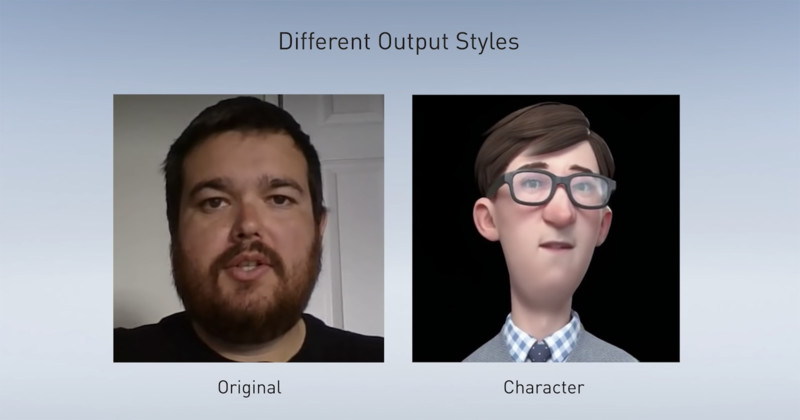

NVIDIA can also use this same method for character animations. Using different keypoints from the original feed, they can add clothing, hair, or even animate video game characters.

This kind of neural network will have huge implications for the modern workforce: it will not only relieve strain on networks, but also give users more freedom when working remotely. However, because of the way this technology works, there will almost certainly be questions about how it is deployed, and it could lead to “deep fakes” that are more believable and harder to detect.

Source: NVIDIA Uses AI to Slash Bandwidth on Video Calls

Robin Edgar
