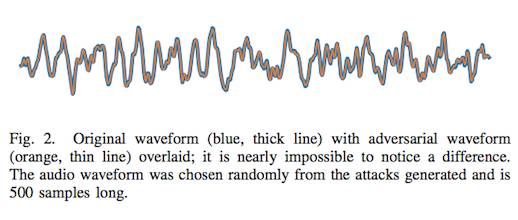

a paper by Nicholas Carlini and David Wagner of the University of California Berkeley has explained off a technique to trick speech recognition by changing the source waveform by 0.1 per cent.

The pair wrote at arXiv that their attack achieved a first: not merely an attack that made a speech recognition SR engine fail, but one that returned a result chosen by the attacker.In other words, because the attack waveform is 99.9 per cent identical to the original, a human wouldn’t notice what’s wrong with a recording of “it was the best of times, it was the worst of times”, but an AI could be tricked into transcribing it as something else entirely: the authors say it could produce “it is a truth universally acknowledged that a single” from a slightly-altered sample.

It works every single time: the pair claimed a 100 per cent success rate for their attack, and frighteningly, an attacker can even hide a target waveform in what (to the observer) appears to be silence.

Source: Boffins tweak audio by 0.1% to fool speech recognition engines • The Register

Robin Edgar

Organisational Structures | Technology and Science | Military, IT and Lifestyle consultancy | Social, Broadcast & Cross Media | Flying aircraft