Boffins at two US universities have found that muting popular native video-conferencing apps does not disable the device microphone, and that these apps can still access audio data while muted, or, in one case, actually do so.

The research is described in a paper titled, “Are You Really Muted?: A Privacy Analysis of Mute Buttons in Video Conferencing Apps,” [PDF] by Yucheng Yang (University of Wisconsin-Madison), Jack West (Loyola University Chicago), George K. Thiruvathukal (Loyola University Chicago), Neil Klingensmith (Loyola University Chicago), and Kassem Fawaz (University of Wisconsin-Madison).

The paper is scheduled to be presented at the Privacy Enhancing Technologies Symposium in July.

[…]

Among the apps studied – Zoom (Enterprise), Slack, Microsoft Teams/Skype, Cisco Webex, Google Meet, BlueJeans, WhereBy, GoToMeeting, Jitsi Meet, and Discord – most presented only limited or theoretical privacy concerns.

The researchers found that all of these apps could capture audio while the mic was muted, but most did not take advantage of this capability. One, however, was found to be taking measurements from audio signals even when the mic was supposedly off.

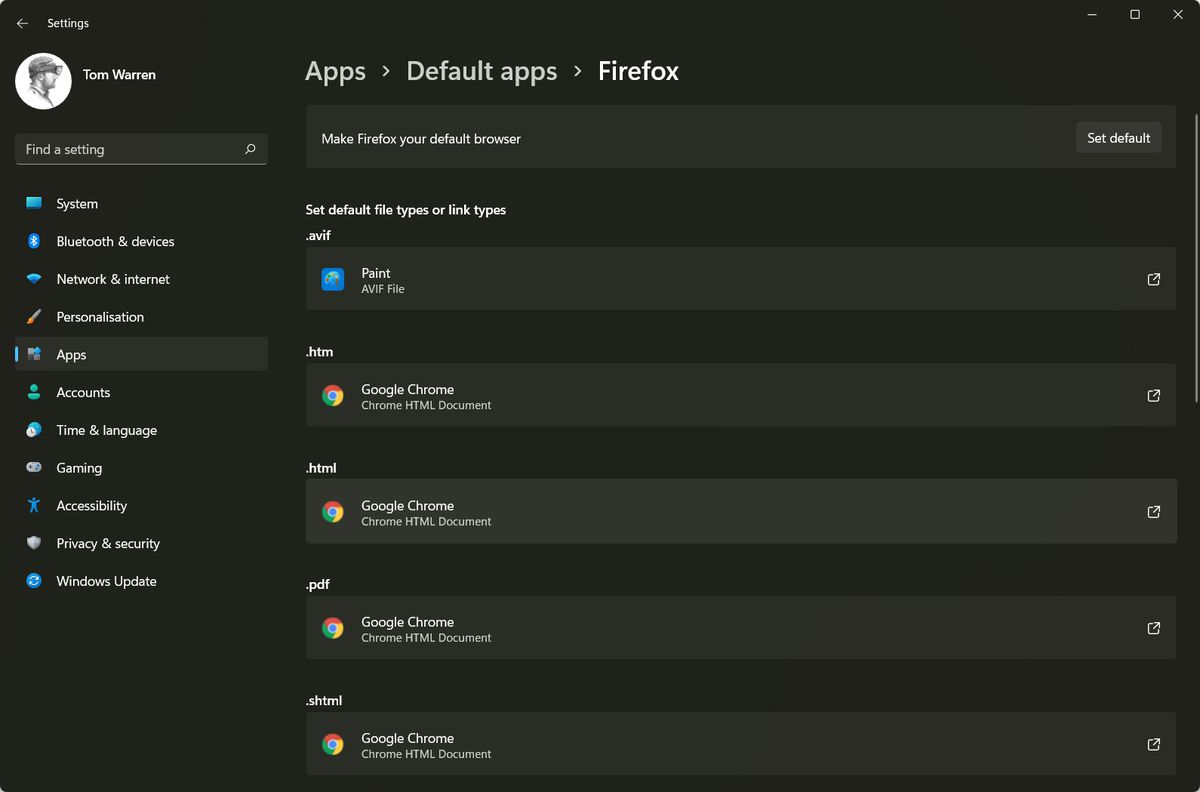

“We discovered that all of the apps in our study could actively query (i.e., retrieve raw audio) the microphone when the user is muted,” the paper says. “Interestingly, in both Windows and macOS, we found that Cisco Webex queries the microphone regardless of the status of the mute button.”

They found that Webex, every minute or so, sends network packets “containing audio-derived telemetry data to its servers, even when the microphone was muted.”
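To make the distinction concrete, here is a minimal Python sketch of a "software mute", in which the mute flag only stops audio from being sent while the app keeps reading the microphone buffer and deriving a measurement from it. Everything in it is an assumption for illustration: the `SoftMuteClient` class, the `rms_dbfs` helper, and the choice of a loudness level as the derived metric are hypothetical and are not taken from the paper or from Webex.

```python
import math
from dataclasses import dataclass, field


def rms_dbfs(samples):
    """Return the RMS level of float samples in [-1, 1], in dB relative to full scale."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


@dataclass
class SoftMuteClient:
    """Hypothetical client whose mute button only affects what is sent."""
    muted: bool = False
    telemetry: list = field(default_factory=list)  # stand-in for data sent to a server

    def process(self, samples):
        # The raw microphone buffer is read regardless of the mute state.
        self.telemetry.append(round(rms_dbfs(samples), 1))
        # Muting only suppresses the outgoing audio stream.
        return [] if self.muted else list(samples)


client = SoftMuteClient(muted=True)
outgoing = client.process([0.5, -0.5, 0.5, -0.5])
print(outgoing)          # no audio leaves the app while muted
print(client.telemetry)  # but a level derived from the audio was still recorded
```

In this sketch, a muted user's audio never leaves `process`, yet each buffer still leaves a trace in `telemetry`. That is the general shape of the behavior the researchers describe, whatever the real telemetry contains.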

[…]

Worse still from a security standpoint, while other apps encrypted their outgoing data stream before sending it to the operating system’s socket interface, Webex did not.

“Only in Webex were we able to intercept plaintext immediately before it is passed to the Windows network socket API,” the paper says, noting that the app’s monitoring behavior is inconsistent with the Webex privacy policy.

The app’s privacy policy states Cisco Webex Meetings does not “monitor or interfere with you your [sic] meeting traffic or content.”

[…]

Source: Cisco’s Webex phoned home audio telemetry even when muted • The Register