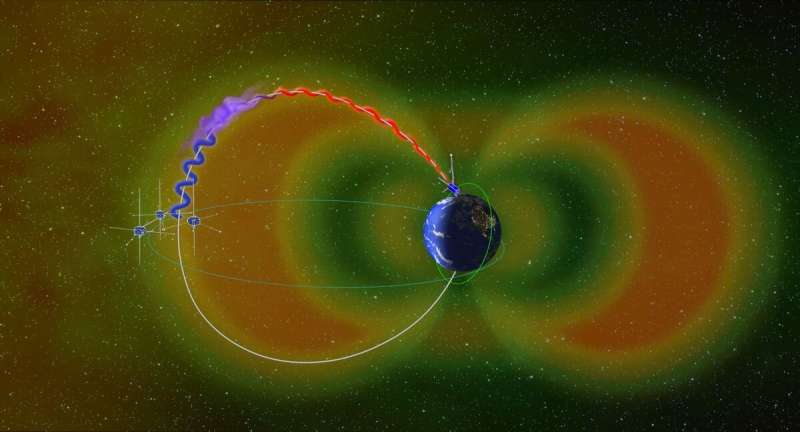

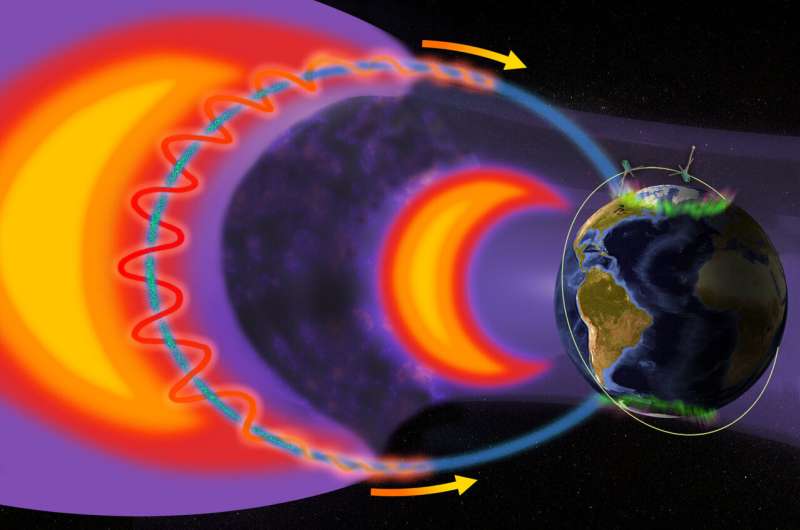

A newly discovered data wiper that targets routers and modems was deployed in the February 24 cyberattack on the KA-SAT satellite broadband service, wiping SATCOM modems and affecting thousands in Ukraine and tens of thousands more across Europe.

The malware, dubbed AcidRain by researchers at SentinelOne, is designed to brute-force device file names and wipe every file it can find, making it easy to redeploy in future attacks.

SentinelOne says this might hint at the attackers’ lack of familiarity with the targeted devices’ filesystem and firmware or their intent to develop a reusable tool.

AcidRain was first spotted on March 15 after its upload onto the VirusTotal malware analysis platform from an IP address in Italy as a 32-bit MIPS ELF binary using the “ukrop” filename.
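For readers who want to see what "32-bit MIPS ELF binary" means in practice, the following is a minimal sketch of how a file's ELF header can be inspected to identify that format. It is not taken from SentinelOne's analysis; the function name `describe_elf` is hypothetical, and the sketch relies only on the standard ELF header layout (class byte at offset 4, data-encoding byte at offset 5, `e_machine` at offset 18, with the value 8 denoting MIPS).

```python
import struct
from typing import Optional

EM_MIPS = 8  # e_machine value assigned to MIPS in the ELF specification


def describe_elf(header: bytes) -> Optional[dict]:
    """Summarize the first bytes of a file if they form an ELF header.

    Hypothetical helper for illustration only. Returns None when the
    data does not start with the ELF magic number.
    """
    if len(header) < 20 or header[:4] != b"\x7fELF":
        return None

    ei_class = header[4]  # 1 = 32-bit, 2 = 64-bit
    ei_data = header[5]   # 1 = little-endian, 2 = big-endian

    # e_machine is a 2-byte field stored in the file's own byte order.
    byte_order = "<" if ei_data == 1 else ">"
    (e_machine,) = struct.unpack_from(byte_order + "H", header, 18)

    return {
        "bits": {1: 32, 2: 64}.get(ei_class),
        "little_endian": ei_data == 1,
        "is_mips": e_machine == EM_MIPS,
    }
```

Passing the first 20 bytes of a sample to `describe_elf` is enough to tell whether it is a 32-bit MIPS ELF file; this only identifies the file format and says nothing about whether the file is malicious.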

Once deployed, it goes through the compromised router or modem’s entire filesystem. It also wipes flash memory, SD/MMC cards, and any virtual block devices it can find, using all possible device identifiers.

“The binary performs an in-depth wipe of the filesystem and various known storage device files. If the code is running as root, AcidRain performs an initial recursive overwrite and delete of non-standard files in the filesystem,” SentinelOne threat researchers Juan Andres Guerrero-Saade and Max van Amerongen explained.

To destroy data on compromised devices, the wiper overwrites file contents with up to 0x40000 bytes (256 KiB) of data or uses the MEMGETINFO, MEMUNLOCK, MEMERASE, and MEMWRITEOOB input/output control (IOCTL) system calls.

After AcidRain’s data wiping processes are completed, the malware reboots the device, rendering it unusable.

Used to wipe satellite communication modems in Ukraine

[…]

This directly contradicts a Viasat incident report on the KA-SAT incident saying it found “no evidence of any compromise or tampering with Viasat modem software or firmware images and no evidence of any supply-chain interference.”

However, Viasat confirmed SentinelOne’s hypothesis, saying the data destroying malware was deployed on modems using “legitimate management” commands.

[…]

The fact that Viasat has shipped almost 30,000 modems since the February 2022 attack to bring customers back online, and continues to ship even more to expedite service restoration, also hints that SentinelOne’s supply-chain attack theory holds water.

[…]

Seventh data wiper deployed against Ukraine this year

AcidRain is the seventh data wiper malware deployed in attacks against Ukraine, with six others having been used to target the country since the start of the year.

The Computer Emergency Response Team of Ukraine recently reported that a data wiper it tracks as DoubleZero has been deployed in attacks targeting Ukrainian enterprises.

One day before the Russian invasion of Ukraine started, ESET spotted a data-wiping malware now known as HermeticWiper, which was used against organizations in Ukraine together with ransomware decoys.

The day Russia invaded Ukraine, ESET also discovered a data wiper dubbed IsaacWiper and a new worm named HermeticWizard, used to drop HermeticWiper payloads.

ESET also spotted a fourth data-destroying malware strain it dubbed CaddyWiper, a wiper that deletes user data and partition information from attached drives and also wipes data across the Windows domains it’s deployed on.

A fifth wiper malware, tracked as WhisperKill, was spotted by Ukraine’s State Service for Communications and Information Protection (CIP), which said it reused 80% of the code of the Encrpt3d Ransomware (also known as WhiteBlackCrypt Ransomware).

In mid-January, Microsoft found a sixth wiper, now tracked as WhisperGate, that was disguised as ransomware and used in data-wiping attacks against Ukraine.

[…]