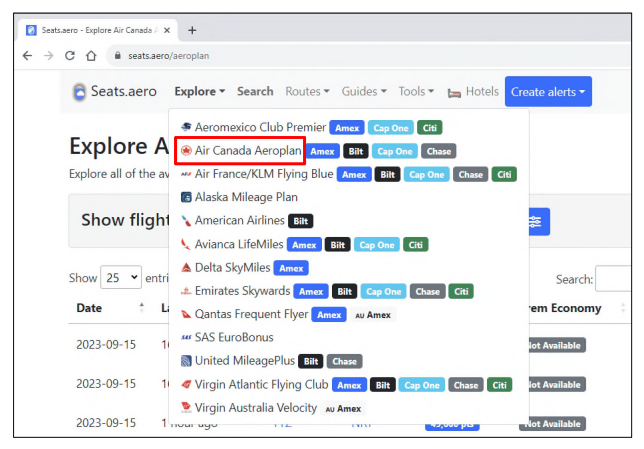

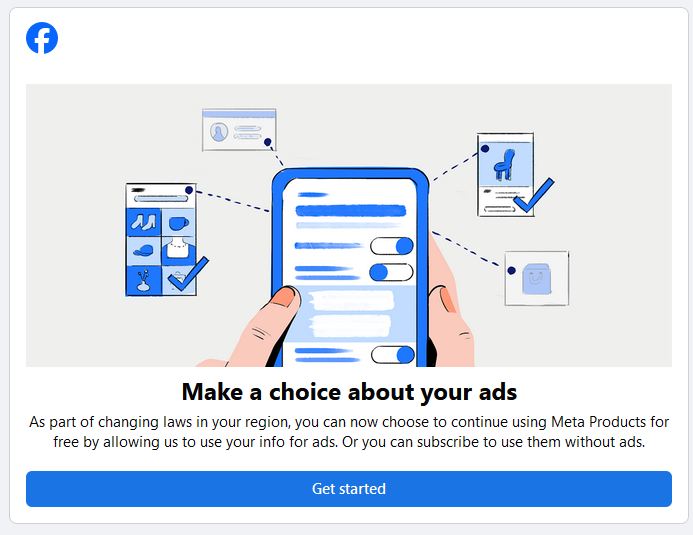

In the EU, Meta has shown you a notice saying that you must choose between an expensive ad-free version and continuing with targeted adverts. Strangely, considering Meta makes its profits by selling your information, you don’t get the option to be paid a cut of those profits. Even more strangely, not many people are covering it. Below is a pretty good writeup of the situation, but what is not clear is whether agreeing to the free version means things continue as they are, or whether you are signing up for additional invasions of your privacy, such as having your information sent to servers in the USA.

Even though it’s a seriously and strangely underreported phenomenon, people are leaving Meta for fear (justly or unjustly) of further intrusions into their privacy by the slurping behemoth.

Why is Meta launching an ad-free plan for Instagram and Facebook?

After receiving major backlash from the European Union in January 2023, resulting in a €390 million fine for the tech giant, Meta has since adapted its applications to suit EU regulations. These major adaptations have all led to the recent launch of its ad-free subscription service.

This most recent announcement is meant to keep Meta in line with the European Union’s Digital Markets Act. The legislation requires companies to obtain users’ consent before tracking them for advertising purposes, something Meta previously wasn’t doing.

To comply with this rule while sustaining its ad-supported business model, Meta is now releasing an ad-free subscription service for users who don’t want targeted ads in their Instagram and Facebook feeds, which also puts some more cash in the company’s pocket.

How much will the ad-free plan cost on Instagram and Facebook?

The price depends on where you purchase the subscription. If you purchase the ad-free plan from Meta on the web, the plan will cost €9.99/month. If you purchase it on your Android or iOS device, the plan will cost €12.99/month. Presumably, this is because Apple and Google charge fees, and Meta is passing those fees along to the user instead of taking a hit on its profit.

If I buy the plan on desktop, will the subscription carry over to my phone?

Yes! It’s confusing at first, but no matter where you sign up for your subscription, it will automatically link to all your Meta accounts, allowing you to view ad-free content on every device. Essentially, if you have access to a desktop and are interested in the ad-free plan, you’re better off signing up there, as you’ll save €3 a month.

When will the ad-free plan be available to Instagram and Facebook users?

The subscription will be available for users in November 2023. Meta didn’t announce a specific date.

“In November, we will be offering people who use Facebook or Instagram and reside in these regions the choice to continue using these personalised services for free with ads, or subscribe to stop seeing ads.”

Can I still use Instagram and Facebook without subscribing to Meta’s ad-free plan?

Meta’s statement said that it believes “in an ad-supported internet, which gives people access to personalized products and services regardless of their economic status.” Staying true to its beliefs, Meta will still allow users to use its services for free with ads.

However, it’s important to note that Meta mentioned in its statement, “Beginning March 1, 2024, an additional fee of €6/month on the web and €8/month on iOS and Android will apply for each additional account listed in a user’s Account Center.” So, for now, the subscription will cover accounts on all platforms, but the cost will rise in the future for users with more than one account.
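
To make the arithmetic concrete, here is a minimal sketch of the monthly bill implied by the prices quoted above. The function and constant names are made up for illustration, and it assumes the extra-account fee is simply added once per additional account from March 1, 2024 onward; Meta’s actual billing rules may differ.

```python
from datetime import date
from decimal import Decimal

# Prices as quoted in the article (EUR per month).
BASE_FEE = {"web": Decimal("9.99"), "mobile": Decimal("12.99")}
EXTRA_ACCOUNT_FEE = {"web": Decimal("6"), "mobile": Decimal("8")}
EXTRA_FEE_START = date(2024, 3, 1)  # date from Meta's statement


def monthly_cost(platform: str, accounts: int, on: date) -> Decimal:
    """Estimate the monthly ad-free bill (hypothetical helper, not a Meta API)."""
    if platform not in BASE_FEE:
        raise ValueError("platform must be 'web' or 'mobile'")
    if accounts < 1:
        raise ValueError("at least one account is required")
    cost = BASE_FEE[platform]
    # Assumption: every account beyond the first is charged the extra fee,
    # but only from the start date onward.
    if on >= EXTRA_FEE_START:
        cost += EXTRA_ACCOUNT_FEE[platform] * (accounts - 1)
    return cost


if __name__ == "__main__":
    print(monthly_cost("web", 3, date(2023, 12, 1)))     # 9.99
    print(monthly_cost("web", 3, date(2024, 3, 1)))      # 21.99
    print(monthly_cost("mobile", 2, date(2024, 3, 1)))   # 20.99
```

Under these assumptions, someone with three accounts who signed up on the web would go from €9.99 to €21.99 a month once the additional fee kicks in.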

Which countries will get the new ad-free subscription option?

The below countries can access Meta’s new subscription:

Austria, Belgium, Bulgaria, Croatia, Republic of Cyprus, Czech Republic, Denmark, Estonia, Finland, France, Germany, Greece, Hungary, Iceland, Ireland, Italy, Latvia, Liechtenstein, Lithuania, Luxembourg, Malta, Norway, Netherlands, Poland, Portugal, Romania, Slovakia, Slovenia, Spain, Switzerland and Sweden.

Will Meta launch this ad-free plan outside the EU, EEA and Switzerland?

It’s unknown at the moment whether Meta plans to expand this service into any other regions. Currently, the only regions able to subscribe to an ad-free plan are those listed above, but if it’s successful in those countries, it’s possible that Meta could roll it out in other regions.

What’s the difference between Meta Verified and this ad-free plan?

Launched in early 2023, Meta Verified allows Facebook and Instagram users to pay for a blue tick mark next to their name. Yes, the same tick mark most celebrities with major followings typically have. This subscription service was launched as a way for users to protect their accounts and promote their businesses. Meta Verified costs $14.99/month (€14/month). It gives users the blue tick mark and provides extra account support and protection from impersonators.

While Meta Verified offers several unique account privacy features for users, it doesn’t offer an ad-free subscription. Currently, those subscribed to Meta Verified must also pay for an ad-free account if they live in one of the supported countries.

How can I sign up for Meta’s ad-free plan for Instagram and Facebook?

Users can sign up for the ad-free subscription via their Facebook or Instagram accounts. Here’s how to sign up:

- Go to account settings on Facebook or Instagram.

- Click subscribe on the ad-free plan under the subscriptions tab (once it’s available).

If I choose not to subscribe, will I receive more ads than I do now?

Meta says that nothing will change about your current account if you choose to keep it as is, meaning you don’t subscribe to the ad-free plan. In other words, you’ll see exactly the same number of ads you’ve always seen.

How will this affect other social media platforms?

Paid subscriptions seem to have been the trend among many social media platforms in the past couple of years. Snapchat hopped onto the trend early, in the summer of 2022, when it released Snapchat+, which allows premium users to pay $4/month to see where they rank on their friends’ best friends list, boost their stories, pin friends as their top best friends, and further customize their settings.

More notably, Twitter, famously bought by Elon Musk and since rebranded as “X,” released three tiers of subscriptions meant to improve a user’s experience: Basic, Premium, and Premium+. X’s latest release, the Premium+ tier, allows users to pay $16/month for an ad-free experience and the ability to edit or undo their posts.

Other major apps, such as TikTok, have yet to announce any ad-free subscription plans, although it wouldn’t be shocking if they followed suit.

For Meta’s part, it claims to want its sites to remain free and ad-supported, but we’ll see how long that lasts, especially if its first two subscription offerings succeed.

This is the spin Facebook itself gives on the story: Facebook and Instagram to Offer Subscription for No Ads in Europe

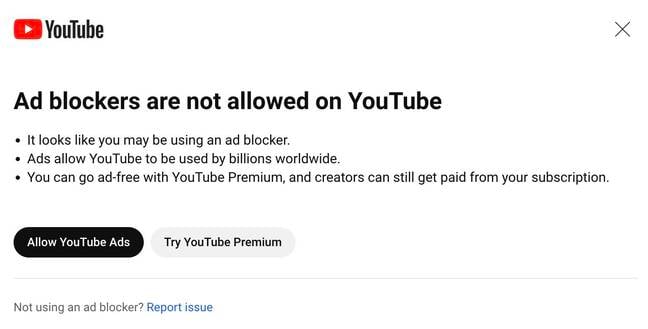

Also noteworthy is that this comes as YouTube is installing spyware onto your computer to figure out if you are running an adblocker, which is also not receiving enough attention.

See also: Privacy advocate challenges YouTube’s ad blocking detection (which isn’t spyware)

and YouTube cares less for your privacy than its revenues

Time to switch to alternatives!