In 2009, Google started recording the number of content removal requests it received from courts and government agencies all over the world, disclosing the figures on a six-month basis. Soon after, several other companies followed suit, including Twitter, Facebook, Microsoft, and Wikimedia.

This year, we’ve extended our study of the above to include Pinterest, Dropbox, Reddit, LinkedIn, TikTok, and Tumblr. Our study looks at the number of content removal requests by platform, which countries have the highest rates of content removal per 100,000 internet users, and how things have changed on a year-by-year basis.

What did we find?

Some governments avidly try to control online data, whether this is on social media, blogs, or both. And not all of the worst offenders may be who you expect.

Top 10 countries by number of content removal requests

According to our findings, the countries with the highest rate of content removal requests per 100,000 internet users are:

- Monaco – 341 content removal requests per 100,000 internet users

- Russia – 146 content removal requests per 100,000 internet users

- Turkey – 138 content removal requests per 100,000 internet users

- France – 97 content removal requests per 100,000 internet users

- Israel – 91 content removal requests per 100,000 internet users

- Liechtenstein – 68 content removal requests per 100,000 internet users

- Pakistan – 62 content removal requests per 100,000 internet users

- South Korea – 49 content removal requests per 100,000 internet users

- Mexico – 49 content removal requests per 100,000 internet users

- Japan – 49 content removal requests per 100,000 internet users

With 130 content removal requests and fewer than 39,000 internet users, Monaco has had the most content removal requests per 100,000 internet users. The majority of these (116) were directed at Facebook, with over 98 percent of those submitted in 2019.
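The rate behind this ranking is simple arithmetic: total requests divided by internet users, scaled to 100,000. Here is a minimal Python sketch. The function name `rate_per_100k` is ours, for illustration only. Because the exact number of internet users in Monaco isn't stated here, the example uses 39,000 as an upper bound, which yields a lower bound on the rate.

```python
def rate_per_100k(requests: int, internet_users: int) -> float:
    """Content removal requests per 100,000 internet users."""
    if internet_users <= 0:
        raise ValueError("internet_users must be positive")
    return requests / internet_users * 100_000


# Monaco: 130 requests, fewer than 39,000 internet users.
# 39,000 is only an upper bound on users (an assumption for this example),
# so the result is a lower bound on the true rate.
monaco_lower_bound = rate_per_100k(130, 39_000)
print(f"Monaco: at least {monaco_lower_bound:.0f} per 100,000 internet users")
```

Since the true user count is below 39,000, the actual rate sits above this bound, which is consistent with the 341 reported in the ranking.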

In second and third place are Russia and Turkey with 146 and 138 content removal requests per 100,000 internet users respectively. Russia had 179,013 requests in total with 69 percent of these being directed toward Google. In contrast, Turkey had 90,696 requests in total with the majority of these (55 percent) being directed toward Twitter.

We’ll delve into the whats and whys of these removals below. But which countries submitted the most requests overall?

If we switch the top 10 to be the countries that submitted the highest number of requests overall, things do change slightly:

- Russia – 179,013 content removal requests submitted in total. The majority of these (69 percent) were directed toward Google

- India – 97,631 content removal requests submitted in total. The majority of these (76 percent) were directed toward Facebook

- Turkey – 90,696 content removal requests submitted in total. The majority of these (55 percent) were directed toward Twitter

- Japan – 56,861 content removal requests submitted in total. The majority of these (98 percent) were directed toward Twitter

- France – 54,627 content removal requests submitted in total. The majority of these (80 percent) were directed toward Facebook

- Mexico – 45,671 content removal requests submitted in total. The majority of these (99 percent) were directed toward Facebook

- Brazil – 36,151 content removal requests submitted in total. The majority of these (72 percent) were directed toward Facebook

- South Korea – 24,658 content removal requests submitted in total. The majority of these (44 percent) were directed toward Twitter

- Pakistan – 23,377 content removal requests submitted in total. The majority of these (84 percent) were directed toward Facebook

- Germany – 19,040 content removal requests submitted in total. The majority of these (68 percent) were directed toward Facebook

Russia outranked all other countries and was the only one with a six-digit figure for government content requests, making 179,013 requests across all platforms. It’s also the highest-ranking country for the number of requests submitted to Google, Reddit, TikTok, and Dropbox.

Interesting, too, is how the United Kingdom and the United States rank in eleventh and twelfth place respectively for the number of content requests submitted. The UK had 17,406 content removal requests in total with 64 percent being submitted to Facebook. Meanwhile, the US had 12,474 in total with 80 percent submitted to Google. In relation to the number of internet users, however, the UK submitted 27 per 100,000 and the US just 4 per 100,000. This places them 16th and 50th in the number of requests per 100,000 internet user rankings respectively.

Highest content removal requests by platform

Now we know which countries have submitted the most requests, which country comes out on top for each platform?

- Google: Russia accounts for 60 percent of requests – 123,607 of 207,066

- Facebook: India accounts for 24 percent of requests – 74,674 of 308,434

- Twitter: Japan accounts for 31 percent of requests – 55,590 of 181,689

- Microsoft: China accounts for 52 percent of requests – 8,665 of 16,817

- Pinterest: South Korea accounts for 46 percent of requests – 2,345 of 5,134

- Tumblr: South Korea accounts for 71 percent of requests – 2,260 of 3,193

- Wikimedia: United States accounts for 23 percent of requests – 977 of 4,256

- Dropbox: Russia accounts for 34 percent of requests – 752 of 2,217

- TikTok: Russia accounts for 24 percent of requests – 150 of 620

- Reddit: Russia accounts for 29 percent of requests – 143 of 488

- LinkedIn: China accounts for 71 percent of requests – 72 of 102
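The percentages in the list above can be reproduced from the two counts given for each platform. The sketch below does this in Python; the helper name `share_percent` and the dictionary layout are assumptions made for illustration, while the figures are copied from the list.

```python
# (top country, requests from that country, total requests to the platform),
# copied from the list above.
TOP_COUNTRY_BY_PLATFORM = {
    "Google": ("Russia", 123_607, 207_066),
    "Facebook": ("India", 74_674, 308_434),
    "Twitter": ("Japan", 55_590, 181_689),
    "Microsoft": ("China", 8_665, 16_817),
    "Pinterest": ("South Korea", 2_345, 5_134),
    "Tumblr": ("South Korea", 2_260, 3_193),
    "Wikimedia": ("United States", 977, 4_256),
    "Dropbox": ("Russia", 752, 2_217),
    "TikTok": ("Russia", 150, 620),
    "Reddit": ("Russia", 143, 488),
    "LinkedIn": ("China", 72, 102),
}


def share_percent(part: int, total: int) -> int:
    """Share of `part` in `total`, rounded to the nearest whole percent."""
    return round(part / total * 100)


for platform, (country, part, total) in TOP_COUNTRY_BY_PLATFORM.items():
    print(f"{platform}: {country} accounts for {share_percent(part, total)} percent")
```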

What about China’s lower rankings across every category but Microsoft and LinkedIn?

China tends not to bother going through content providers and their in-house reporting mechanisms to censor content. It simply blocks entire sites and apps outright, forcing internet service providers to bar access on behalf of the government. China has banned all of the websites we have used in this comparison, except for LinkedIn and some of Microsoft’s services–the two areas where it dominates the content removal requests.

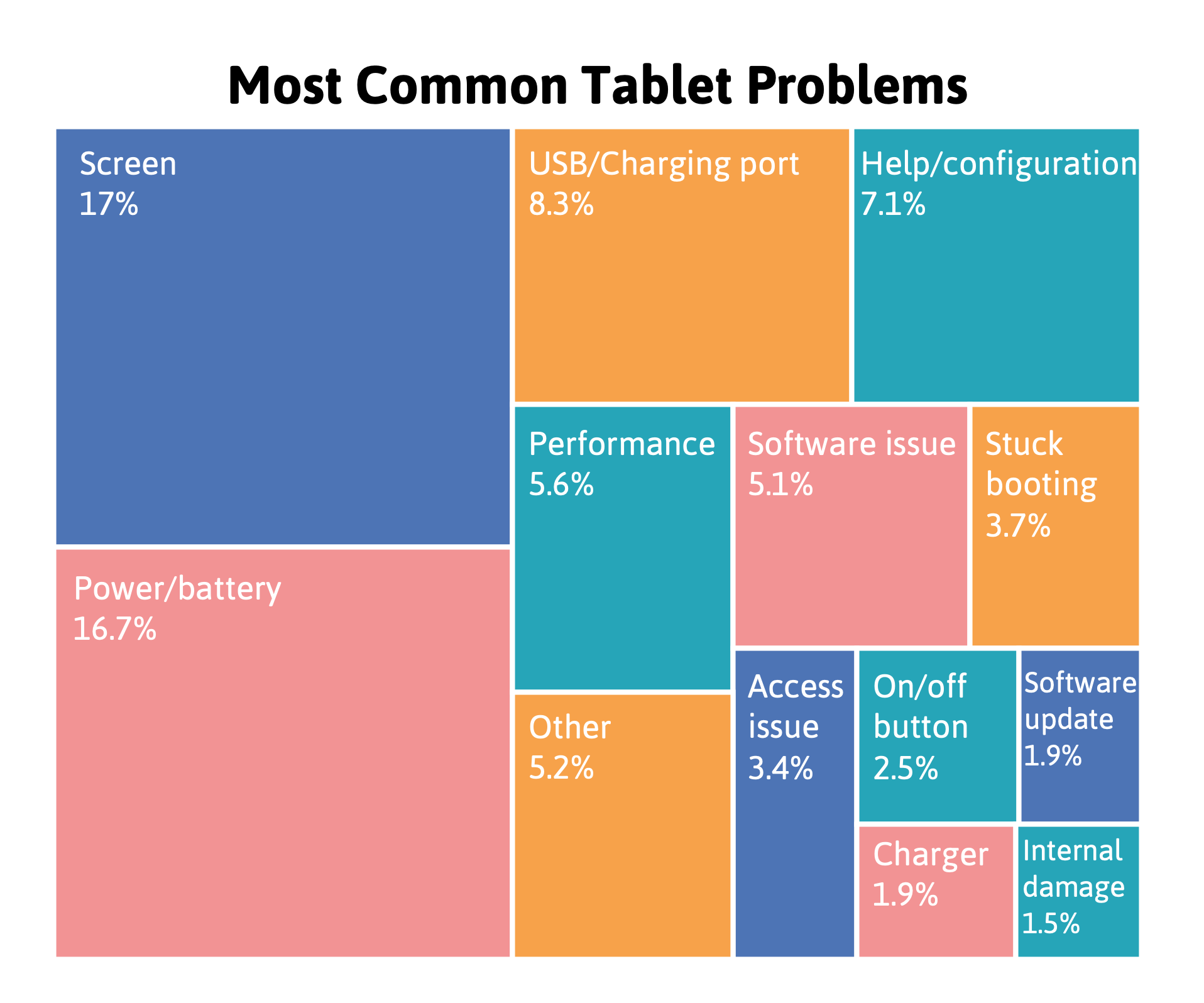

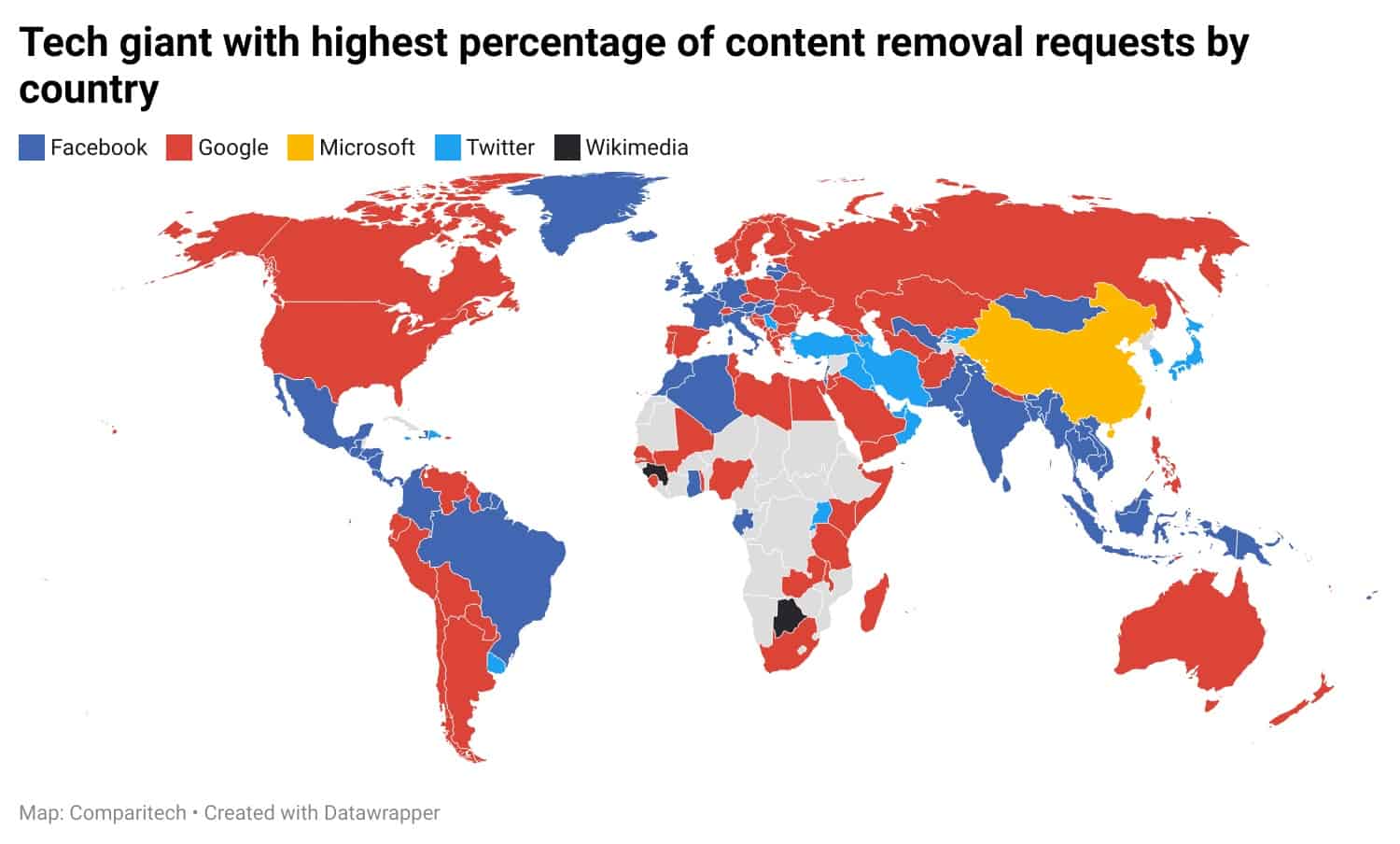

Which tech giant is receiving the highest percentage of removal requests in each country?

If we look at which tech giant is receiving the highest percentage of removal requests in each country, we can see that Google and Facebook tend to receive the vast majority.

Many Central European, South East Asian, and some South American countries submit the majority of their removal requests to Facebook, while many African and Eastern European countries, as well as the US, Canada, and Australia, submit most of theirs to Google. A large number of Middle Eastern countries submit the majority of requests to Twitter.

Biggest years for government content removal requests

Following a slight dip in 2019 (a 2 percent decrease on the number of requests submitted in 2018), removal requests bounced back up by 69 percent from 2019 to 2020. Twitter accounted for the largest percentage of these requests with 80,744 (40 percent) of the 203,698 requests submitted in total. It was closely followed by Facebook (62,314 or 31 percent) and Google (44,065 or 22 percent).

Over the years, these platforms have received the most content removal requests. But, when you take into consideration that all three are the most widely used of all the platforms we’ve covered, that’s perhaps no surprise.

However, what the above does show us is how the focus on platforms has changed over the years.

Facebook’s biggest year for content removal requests came in 2015 when 76,395 requests were submitted (25 percent of its overall total). These requests then dropped significantly in 2016 before increasing by 155 and 21 percent from 2016 to 2017 and 2017 to 2018 respectively. Figures then dropped by 34 percent from 2018 to 2019 before almost doubling again from 2019 to 2020.

Google also witnessed a similar drop in 2019 when requests dipped by 30 percent, having been growing by around 10,000 each year from 2016 to 2018. In 2020, the number of requests rose again by 46 percent.

Twitter, however, didn’t follow this trend. In 2019, Twitter saw a 97 percent increase in the number of requests submitted (rising from 23,464 in 2018 to 46,291 in 2019). The number of content removal requests submitted to Twitter continued to rise significantly in 2020, too, climbing by a further 74 percent to 80,744. In fact, of all the platforms we’ve studied, Twitter is the only platform (bar LinkedIn and Reddit, which have only recently begun to publish reports) that has seen an increase in content removal requests each and every year.

Why does Twitter appear to be dominating content removal requests? After all, it doesn’t have the largest number of users (it has around 396.5m users compared to Facebook’s 2.8bn).

The majority of the increase comes from Japan, India, South Korea, and Indonesia. As we’ll see further on, Twitter Japan has recently been under fire for censoring government critics. Other reasons could be increases in scams, misinformation around elections, and general violations of local laws.

Russia accounts for 60 percent of Google’s content removal requests

As mentioned previously, Russia dominates the number of content removal requests made to Google, accounting for 123,607 (60 percent) in total. Despite Russia’s requests dropping from over 30,000 in 2018 to just under 20,000 in 2019, they jumped back up to a record-breaking 31,384 in 2020. This dip in 2019 was a worldwide trend, however, with a 30 percent decrease in removal requests in 2019 followed by a 46 percent increase in 2020.

Nearly 34 percent of Russia’s requests come under the reason of national security, closely followed by copyright (26 percent) and regulated goods and services (18 percent).

Russia’s requests are significantly higher than those of second-place Turkey, which sent just 14,242 requests, or 7 percent of all requests received. Turkey was closely followed by India (10,138 with 4.89 percent) and the US (9,933 with 4.79 percent). Defamation is the main reason for each of these three countries’ requests, accounting for 39 percent of Turkey’s total, 27 percent of India’s total, and 58 percent of the US’s total.

Which of Google’s products are being targeted by these removal requests?

YouTube and web searches are the prime targets for these removal requests. Of all the requests, 50 percent are directed toward YouTube and 30 percent toward web searches.

Examples of Google content removal requests

Some examples of the requests submitted by Russia, Turkey, and India include:

Russia: “Roskomnadzor requested that we block a Russian-language summary of a Financial Times report claiming that the content was “extremist”. The article stated that the real number of coronavirus deaths in Russia is potentially 70% higher than what official statistics report.” – The content wasn’t removed, which was, in part, due to procedural defects in the way the request had been served (Jan-Jun, 2020).

Turkey: “We received a court order to delist 5 URLs from Google Search and to remove 1 Blogger blog post on the basis of “right-to-be-forgotten” legislation, on behalf of a high-ranking official. The news articles reported accusations of organised crime, which allegedly led to a criminal complaint.” – The URLs were not delisted or removed (Jul-Dec, 2020).

Turkey: “We received a court order to remove 2 Google Groups posts, 2 Blogger posts, 1 Blogger image, and an entire Blogger blog publishing political caricatures of a very senior Government official of Turkey.” – The content was not removed (Jul-Dec, 2016).

India: “We received multiple requests from Indian law enforcement for 173 YouTube URLs depicting content related to COVID-19. The reported content ranged from conspiracy theories and religious hate speech related to COVID-19 to news reports and criticism of the authorities’ handling of the pandemic.” – 14 URLs were removed for violating YouTube’s community guidelines and 30 URLs were restricted in India based on cited local laws. Further information was requested for 106 URLs, 10 URLs were not removed, and 13 URLs were already down.

India accounts for 24 percent of Facebook’s content removal requests

Facebook received the largest number of government content requests overall with 308,434 in total. India made up the largest share of these, with its 74,674 requests accounting for just over 24 percent of the total. The largest share of India’s requests (40 percent) was made in 2015, when 30,126 requests were submitted. Since then, India’s requests have remained much lower, only reaching two or three thousand per year, except in 2018 when requests spiked again to just over 19,000.

Interestingly, in 2015, the Supreme Court of India struck down section 66A of the Information Technology Act, 2000, which made posting “offensive” comments online a crime that was punishable by jail. Perhaps this led to an influx of offensive comments on platforms like Facebook, or authorities turned to Facebook’s content removal system to try and combat things differently.

In second place for removal requests via Facebook is Mexico with 45,217. The largest share of these requests (45 percent) was placed in the first half of 2017, shortly after Mexico first started submitting removal requests (its first figures are recorded for the latter part of 2016). Therefore, Mexican officials were perhaps “catching up” on the content that they thought violated local law. Mexico’s removal requests dropped dramatically in 2018 (2,040 submitted in total) before rising in 2019 (by 240 percent to 6,946) and in 2020 (by 93 percent to 13,399).

Mexico was closely followed by France with 43,816 requests. Again, the majority of these requests were submitted years ago (37,695 or 86 percent were submitted in the second half of 2015). But unlike Mexico, France’s requests have continued to decline year on year with just 298 submitted in all of 2020. This dramatic peak in removal requests does coincide with the November 2015 terror attacks in Paris.

Oddly, the US doesn’t feature anywhere near the top for removal requests, ranking 57th for its mere 27 removal requests since reporting began. Facebook’s Transparency Report suggests a country might not make the list either because Facebook’s services aren’t available there or there haven’t been any items of this type to report. The US doesn’t fall into the former, but the latter doesn’t seem likely either, especially when you consider the United States’ removal requests across other platforms. Furthermore, there is a case study (like the ones depicted below) for the US, which reads:

“We received a request from a county prosecutor’s office to remove a page opposing a county animal control agency, alleging that the page made threatening comments about the director of the agency and violated laws against menacing.” Facebook reviewed the page and found there to be no credible threats so it, therefore, didn’t violate their Community Standards. (Oct 2015)

Examples of Facebook content removal requests

India: “We received a request from law enforcement in India to remove a photo that depicted a sketch of the Prophet Mohammed.” – The content didn’t violate Facebook’s Community Standards but was made unavailable in India where any depiction of Mohammed is forbidden. (Jun 2016)

France: “Following the November 2015 terrorist attacks in Paris, we received a request from L’Office Central de Lutte Contre la Criminalité Liée aux Technologies de l’Information et de la Communication (OCLCTIC), a division of French law enforcement, to remove a number of instances of a photo taken inside the Bataclan concert venue depicting the remains of several victims. The photo was alleged to violate French laws related to protecting human dignity.” – The content didn’t violate Facebook’s Community Standards but 32,100 instances of the photo were restricted in France. It was still available in other countries. (Nov 2015)

Mexico: “We received a request from the Mexican Federal Electoral Court to remove 239 items in connection with two complaints filed by the Partido de la Revolución Democrática (“PRD”) against Governmental Entities in Mexico. The PRD alleged that the content violated Mexico’s election laws.” – The content didn’t violate Facebook’s Community Standards but access to 63 posts was restricted in Mexico as they were deemed unlawful. 159 items were duplicated or had already been removed. (Jan 2020)

Japan accounts for 31 percent of Twitter’s content removal requests

Japan had the largest number of government content requests on Twitter with 55,590 requests submitted in total. This accounted for 31 percent of all of the requests recorded by Twitter. Most of these requests (36,573 or 66 percent) were submitted in 2020. In fact, Japan’s content removal requests to Twitter have increased dramatically in recent years, jumping by 1,916 percent from 2018 to 2019 (from 875 to 17,640) and by 107 percent from 2019 to 2020 (from 17,640 to 36,573).

While the removal requests across Twitter have increased on a yearly basis (worldwide), Japan’s growth exceeds the worldwide average of 97 percent from 2018 to 2019 and 74 percent from 2019 to 2020. This comes amid recent reports that Twitter Japan seems to be suspending government critics. However, Twitter’s official report suggests the majority of the removal requests relate to laws surrounding narcotics and psychotropics, obscenity, or money lending.
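The year-on-year growth figures quoted for Japan and for Twitter worldwide follow from the standard percentage-change formula. A short Python sketch, assuming a helper we've named `pct_change` for illustration, with the yearly totals taken from this article:

```python
def pct_change(old: int, new: int) -> int:
    """Percentage change from `old` to `new`, rounded to the nearest whole percent."""
    if old == 0:
        raise ValueError("percentage change is undefined when the starting value is 0")
    return round((new - old) / old * 100)


# Yearly content removal requests to Twitter, as quoted in this article.
japan = {2018: 875, 2019: 17_640, 2020: 36_573}
worldwide = {2018: 23_464, 2019: 46_291, 2020: 80_744}

for label, series in (("Japan", japan), ("Worldwide", worldwide)):
    for year in (2019, 2020):
        change = pct_change(series[year - 1], series[year])
        print(f"{label}, {year - 1} to {year}: {change:+d} percent")
```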

In second place was Turkey with 49,525 requests, followed by Russia with 36,787 requests. Although Russia follows Japan’s trend with yearly increases in removal requests (99 percent from 2018 to 2019 and 54 percent from 2019 to 2020), Turkey’s removal requests are in decline (dropping by 20 percent from 2018 to 2019 and by 28 percent from 2019 to 2020).

Examples of Twitter content removal requests

Turkey: “Twitter received a court order from Turkey regarding two Tweets containing insulting language towards a high-level official of a prominent bank in Turkey for violation of personal rights. Twitter withheld both Tweets in Turkey in response to the court order.” (Jul-Dec, 2020)

Russia: “We received the first Periscope removal request from Roskomnadzor concerning a prisoner’s account. Citing Article 82 of the Russian Criminal Executive Code, the reporter asked us to ‘block the account from which the violating broadcast was made’. However, the reported account had no broadcasts, so we did not take any action.” (Jan-Jun 2017)

France: “We withheld one Tweet in response to a legal demand from the Office Central de Lutte contre la Criminalité liée aux Technologies de l’Information et de la Communication (OCLCTIC) for glorification of terrorist attacks.” (Jul-Dec 2017)

China accounts for 52 percent of Microsoft’s content removal requests

As we have already seen, China barely features in the content removal requests for any of the aforementioned platforms. This is due to the widespread blocking of these platforms, which removes the need for such requests. However, as some of Microsoft’s products are available in China, it accounts for over half of all the requests submitted to this tech giant.

Unfortunately, Microsoft doesn’t offer any insight into why the content removal requests are submitted. What it does indicate, however, is how many requests result in any action being taken. From July to December 2020, 96 percent of China’s requests were actioned. Russia (the second-highest submitter of requests) had just 41 percent of its requests actioned, while France had 89 percent.

Since the second half of 2018, China has always submitted over 1,000 removal requests every six months to Microsoft. Russia, however, upped its requests significantly in the second half of 2019, submitting nearly 300 percent more than the first half (2,951 compared to 743). But these started to drop off again in 2020, reducing by 45 percent and 58 percent in the first and second half of 2020, respectively.

Content removal requests across other platforms

Google, Facebook, Twitter, and Microsoft account for the vast majority of content removal requests, but the following also show interesting insights into where governments are focusing their online censorship efforts.

Dropbox

Russia submitted 34 percent of all the content removal requests to Dropbox, followed by France with 24 percent and the UK with 21 percent. Russia’s requests peaked in 2017 with 243 of its 752 (32 percent) requests submitted during this time. France’s peak came in 2018 with 63 percent of its total (331 of 524) submitted then. The UK also submitted the largest share of its requests (41 percent) in 2017.

Since 2017/18, Dropbox’s removal requests have decreased quite significantly, falling by 38 percent from 2018 to 2019 and by 52 percent from 2019 to 2020.

Dropbox doesn’t provide insight into the types of content removal requests that are submitted but does appear to action the majority of requests it receives for most countries. For example, the US submitted 33 requests which affected 45 accounts. All but 2 of these accounts had action taken against them. However, of the 48 requests submitted by Russia in 2020, which affected 13 accounts, only 7 accounts had content blocked on them.

LinkedIn

LinkedIn receives very few content removal requests according to its transparency report, and the vast majority of these are submitted by China. 42 out of 50 of the requests in 2020 came from China, with only 14 countries having submitted any such request in the last three years (from 2018 to 2020).

Pinterest

The number of requests submitted to Pinterest has grown significantly within the last two reporting periods, increasing by 500 percent from 2019 to 2020 (from 680 to 4,078). South Korea and Russia account for the majority of these requests, submitting 46 and 43 percent of the total requests respectively.

Most of South Korea’s requests (99 percent) came in 2020 while Russia has been upping its requests since 2018. Russia submitted 102 in 2018, increasing by 376 percent to 486 in 2019 before rising by a further 234 percent to 1,622 in 2020.

Most of the content removal requests submitted to Pinterest are due to violations of community guidelines. For example, in 2020, 90 percent of the requests submitted were due to content that violated Pinterest’s community guidelines. No specific examples are available.

Reddit

Even though government content removal requests for Reddit have increased in recent years, the numbers are still within the low hundreds. Furthermore, as Reddit’s report demonstrates, a lot of the content restricted due to these requests is restricted only in the local area (over 71 percent of the pieces of content flagged by government requests in 2020 were restricted only locally).

Russia is, again, the main culprit for these requests, submitting over 29 percent of all the requests. Turkey has submitted the second-highest number of requests (100 or 20 percent) but most of these came in 2018 and 2019. In 2020, South Korea upped its requests with 60 in total (it only submitted 1 in 2019 and none before that).

No further information on the type of requests is available.

Tumblr

Data is only available from mid-2019 for Tumblr so it’s hard to conduct real comparisons on how things have changed on a year-by-year basis here. However, from the second half of 2019 to the first half of 2020, requests jumped by 229 percent (from 224 to 738) before rising by another 202 percent in the second half of 2020 (from 738 to 2,231).

South Korea dominates the requests submitted to this platform, accounting for 71 percent of all requests ever submitted. According to Tumblr’s report, 96 percent of the requests submitted by South Korea in 2020 resulted in data being removed–the global average was 95 percent.

No further details on the requests are available.

TikTok

The number of requests submitted to TikTok has been steadily increasing in recent years. Most of these have come from Russia (24 percent), Pakistan (16 percent), and India (15 percent). While India and Pakistan submitted requests in 2019 and 2020, all of Russia’s requests came in 2020 alone.

TikTok doesn’t provide an insight into the reason for the content removal requests but does give figures for how much content is affected by the requests. Pakistan’s 97 removal requests in the second half of 2020 saw the greatest amount of content affected with 14,263 pieces implicated in total. In contrast, Russia’s 135 requests implicated 429 pieces of content.

Wikimedia

From 2018 to 2019, Wikimedia’s content removal requests dropped by 35 percent (from 880 to 573), before rising again by 29 percent (from 573 to 741) from 2019 to 2020.

The United States accounts for the greatest chunk of these requests (across all years), accounting for 23 percent in total. However, the US’s requests have decreased in recent years.

What is particularly interesting about these Wikimedia content removal requests is that they are hardly ever actioned. According to the reports, only 2 of the 380 requests submitted in the second half of 2020 were actioned. Before that, the only content removal request accepted was from Ukraine in 2014. A blogger included a photo of his visa to visit Burma/Myanmar on his website. He had scrubbed his personal details from the image. The same picture later appeared on English Wikipedia in an article about the country’s visa policy. The redactions were removed and his information exposed. Given the nature of the information and the circumstances of how it was exposed, Wikimedia granted the takedown request.

Methodology

Our team extracted the data from the transparency reports for Google, Twitter, Facebook, Microsoft, Wikimedia, Pinterest, Dropbox, Reddit, LinkedIn, TikTok, and Tumblr. We analyzed the data by country and year, while also noting any other significant details where available.

In Facebook’s latest report for the second half of 2020, every country was listed as having at least 12 removal requests. Due to the volume of countries with a 12, this appeared to be a glitch in the report as the majority of countries normally had 0. Therefore, we replaced every 12 with a 0 to avoid overstating the number of requests received.
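The clean-up step described above amounts to treating a suspicious repeated value as zero. Here is a rough Python sketch, assuming the per-country counts are held in a plain dictionary. The function name, the constant, and the sample counts are invented for illustration and are not taken from Facebook's report.

```python
SUSPECT_VALUE = 12  # the value that appeared against nearly every country


def zero_out_placeholder(counts: dict[str, int], suspect: int = SUSPECT_VALUE) -> dict[str, int]:
    """Return a copy of `counts` with every entry equal to `suspect` set to 0."""
    return {country: (0 if n == suspect else n) for country, n in counts.items()}


# Made-up sample values, not real report data.
sample = {"Country A": 12, "Country B": 450, "Country C": 12}
print(zero_out_placeholder(sample))
```

One trade-off of a rule like this is that a country that genuinely submitted exactly 12 requests would also be set to 0.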

When creating a ratio of content removal requests to internet users, we omitted two countries from the top 10: Tokelau and Cook Islands. They had just 1 and 6 content removal requests in total respectively but, because of their low populations, they were classed as having a high rate of requests per 100,000 users, which would be an unfair representation.
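As an illustration of the ranking step, the sketch below computes requests per 100,000 internet users and skips the two omitted countries before taking the top entries. It is not our actual pipeline. The function name `top_by_rate` and the row layout are assumptions, and every internet-user count in the sample is a placeholder rather than a real statistic.

```python
EXCLUDED = {"Tokelau", "Cook Islands"}  # the two countries omitted from the ranking


def top_by_rate(rows, n=10, excluded=EXCLUDED):
    """Rank countries by requests per 100,000 internet users.

    `rows` is an iterable of (country, requests, internet_users) tuples.
    Countries in `excluded` (or with no recorded users) are skipped.
    """
    rated = [
        (country, requests / users * 100_000)
        for country, requests, users in rows
        if country not in excluded and users > 0
    ]
    return sorted(rated, key=lambda item: item[1], reverse=True)[:n]


# Request counts for Tokelau (1) and Cook Islands (6) come from the text above;
# all user counts and the other rows are placeholders for illustration only.
rows = [
    ("Tokelau", 1, 500),
    ("Cook Islands", 6, 5_000),
    ("Country A", 130, 40_000),
    ("Country B", 2_000, 10_000_000),
]
for country, rate in top_by_rate(rows, n=2):
    print(f"{country}: {rate:.0f} per 100,000 internet users")
```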

Sources

https://transparencyreport.google.com/government-removals/by-country?hl=en

https://transparency.twitter.com/en/removal-requests.html

https://www.microsoft.com/en-us/corporate-responsibility/crrr

https://govtrequests.facebook.com/content-restrictions

https://transparency.wikimedia.org/content.html

https://www.dropbox.com/transparency/reports

https://about.linkedin.com/transparency/government-requests-report

https://policy.pinterest.com/en/transparency-report

https://www.redditinc.com/policies/transparency-report-2020

https://www.tumblr.com/transparency

https://www.tiktok.com/safety/resources/transparency-report-2020-2