A dramatic appeal aims to ensure the survival of the nonprofit Internet Archive. The signatories of a petition, which is now open for further signatures, are demanding that the US recording industry association RIAA and participating labels such as Universal Music Group (UMG), Capitol Records, Sony Music, and Arista drop their lawsuit against the online library. The legal dispute, pending since mid-2023 and expanded in March, centers on the “Great 78” project. This project aims to save 500,000 song recordings by digitizing 250,000 records from the period 1880 to 1960. Various institutions and collectors have donated the records, which are made to be played at 78 revolutions per minute (“shellac”), so that the Internet Archive can put this cultural treasure online.

The music companies originally demanded $372 million for the online publication of the songs and the associated “mass theft.” They recently increased their demand to $700 million for potential copyright infringement. The basis for the lawsuit is the Music Modernization Act, which US President Donald Trump signed in 2018. This includes the CLASSICS Act. This law retroactively introduces federal copyright protection for sound recordings made before 1972, which until then were protected in the US by different state laws. The monopoly rights now apply US-wide for a good 100 years (for recordings made before 1946) or until 2067 (for recordings made between 1947 and 1972).

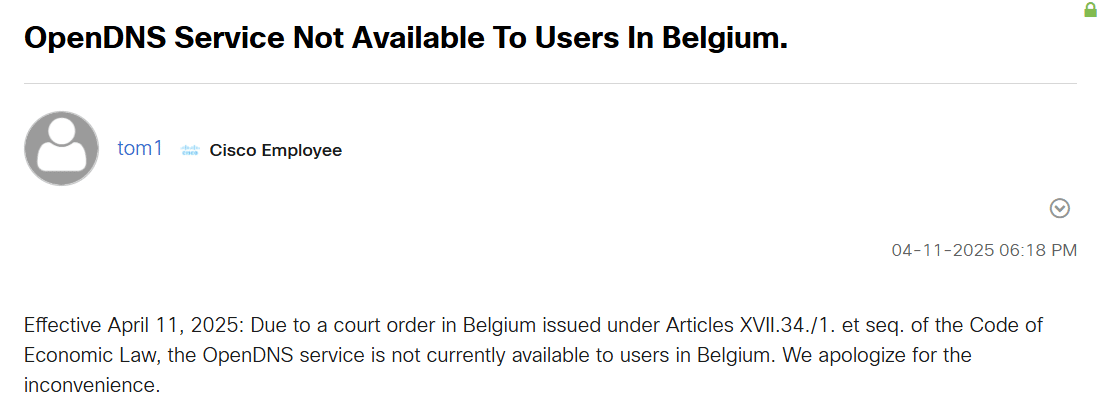

Critical Internet infrastructure is at stake

The lawsuit ultimately threatens the existence of the entire Internet Archive, including the well-known Wayback Machine, the signatories say. This important public service is used by millions of people every day to access historical “snapshots” from the web. Journalists, educators, researchers, lawyers, and citizens use it to verify sources, investigate disinformation, and maintain public accountability. The legal attack also puts a “critical infrastructure of the internet” at risk. And this at a time when digital information is being deleted, overwritten, and destroyed: “We cannot afford to lose the tools that preserve memory and defend facts.” The Internet Archive was forced to delete 500,000 books as recently as 2024. It also continually struggles with IT attacks.

The case is called Universal Music Group et al. v. Internet Archive. The lawsuit was originally filed in the U.S. District Court for the Southern District of New York (Case No. 1:23-cv-07133), but is now pending in the U.S. District Court for the Northern District of California (Case No. 3:23-cv-6522). The Internet Archive takes the position that the Great 78 project does not harm the music industry. Quite the opposite: Anyone who wants to enjoy music uses commercial streaming services anyway; the old 78 rpm shellac recordings are study material for researchers.

Original page here: https://www.heise.de/news/Klage-von-Plattenlabels-Petition-zur-Rettung-des-Internet-Archive-10358777.html

How can copyright law be so incredibly wrong all the time?!