Tesco has shuttered its parking validation web app after The Register uncovered tens of millions of unsecured ANPR images sitting in a Microsoft Azure blob.

The images consisted of photos of cars taken as they entered and left 19 Tesco car parks spread across Britain. Visible and highlighted were the cars’ numberplates, though drivers were not visible in the low-res images seen by The Register.

Used to power the supermarket’s outsourced parkshopreg.co.uk website, the Azure blob had no login or authentication controls. Tesco admitted to The Register that “tens of millions” of timestamped images were stored on it, adding that the images had been left exposed after a data migration exercise.

Ranger Services, which operated the Azure blob and the parkshopreg.co.uk web app, said it had nothing to add and did not answer any questions put to it by The Register. We understand that they are still investigating the extent of the breach. The firm recently merged with rival parking operator CP Plus and renamed itself GroupNexus.

[…]

The Tesco car parks affected by the breach include Braintree, Chelmsford, Chester, Epping, Fareham, Faversham, Gateshead, Hailsham, Hereford, Hove, Hull, Kidderminster, Woolwich, Rotherham, Sale (Cheshire), Slough, Stevenage, Truro, Walsall and Weston-super-Mare.

The web app compared the store-generated code with the ANPR images to decide whom to issue with parking charges. Ranger Services has pulled parkshopreg.co.uk offline, with its homepage now defaulting to a 403 error page.

[…]

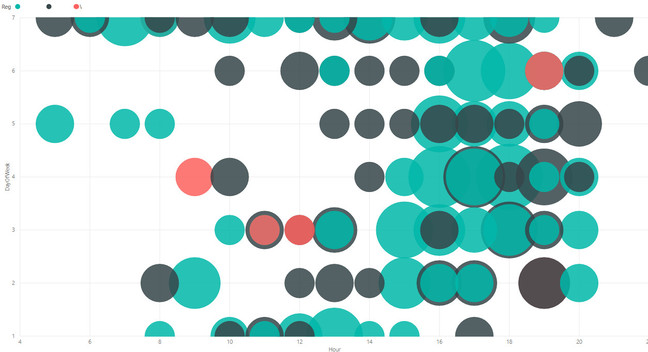

A malicious person could use the data in the images to create graphs showing the most likely times for a vehicle of interest to be parked at one of the affected Tesco shops.

This was what Reg reader Ross was able to do after he realised just how insecure the database behind the parking validation app was.

Frequency of parking for three vehicles at Tesco in Faversham. Each colour represents one vehicle; the size of the circle shows how frequently they parked at the given time.
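To illustrate why timestamped numberplate sightings are sensitive even without any images of people, here is a minimal sketch of the kind of tally that could sit behind such a chart. It is not Ross's method, which the article does not describe. The record layout, the plates, the timestamps and the `hourly_frequency` helper are all made up for illustration.

```python
from collections import Counter
from datetime import datetime

# Hypothetical records: (numberplate, ISO 8601 timestamp).
# These values are invented; they are not taken from the exposed data.
sightings = [
    ("AB12 CDE", "2019-09-02T08:15:00"),
    ("AB12 CDE", "2019-09-09T08:40:00"),
    ("AB12 CDE", "2019-09-10T17:05:00"),
    ("XY34 ZZZ", "2019-09-02T12:30:00"),
]

def hourly_frequency(records):
    """Count how often each plate was seen in each hour of the day."""
    counts = Counter()
    for plate, stamp in records:
        hour = datetime.fromisoformat(stamp).hour
        counts[(plate, hour)] += 1
    return counts

freq = hourly_frequency(sightings)

# Each (plate, hour) pair would be one circle on the chart,
# with the count setting the circle's size.
for (plate, hour), n in sorted(freq.items()):
    print(f"{plate} seen {n} time(s) in the {hour:02d}:00 hour")
```

With enough records, a handful of lines like these would be enough to show when a given vehicle is usually parked at a given store.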

A Tesco spokesman told The Register: “A technical issue with a parking app meant that for a short period historic images and times of cars entering and exiting our car parks were accessible. Whilst no images of people, nor any sensitive data were available, any security breach is unacceptable and we have now disabled the app as we work with our service provider to ensure it doesn’t happen again.”

We are told that during a planned data migration exercise to an AWS data lake, access to the Azure blob was opened to aid with the process. While it has been shut off, Tesco hasn’t told us how long it was left open for.

Tesco said that because it bought the car park monitoring services in from a third party, the third party was responsible for protecting the data in law. Ranger Services had not responded to The Register’s questions about whether it had informed the Information Commissioner’s Office by the time of writing.

[…]

As part of our investigation into the Tesco breach we also found exposed data in an unsecured AWS bucket belonging to car park operator NCP. The data was powering an online dashboard that could also be accessed without any login creds at all. A few tens of thousands of images were exposed in that bucket.

[…]

The unsecured NCP Vizuul dashboard

The dashboard, hosted at Vizuul.com, allowed the casual browser to pore through aggregated information drawn from ANPR cameras at an unidentified location. The information on display allowed one to view how many times a particular numberplate had infringed the car park rules, how many times it had been flagged in particular car parks, and how many penalty charge notices had been issued to it in the past.

The dashboard has since been pulled from public view.