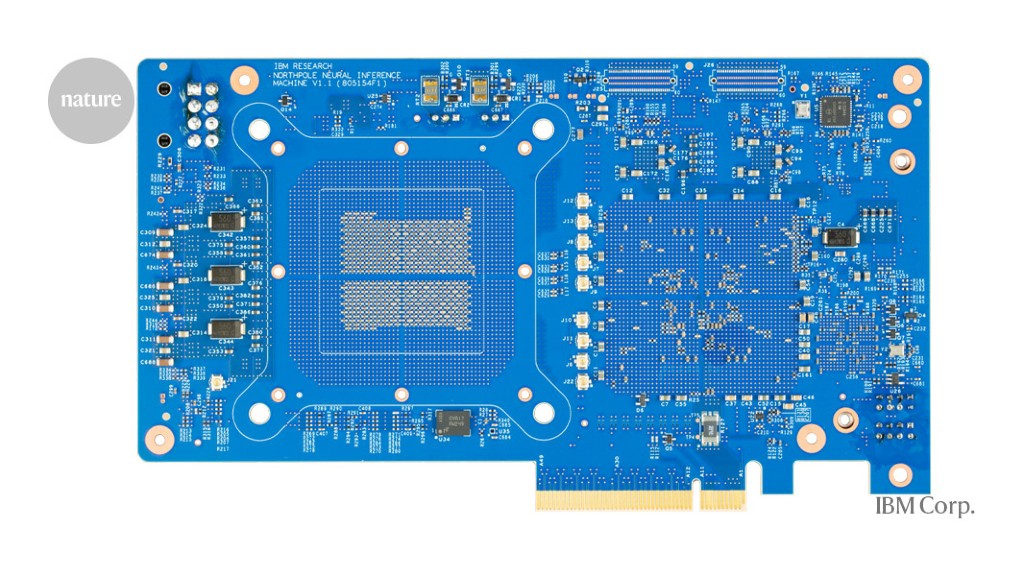

IBM's massive NorthPole processor chip eliminates the need to frequently access external memory, and so performs tasks such as image recognition faster than existing architectures do, while consuming vastly less power.

“Its energy efficiency is just mind-blowing,” says Damien Querlioz, a nanoelectronics researcher at the University of Paris-Saclay in Palaiseau. The work, published in Science (ref. 1), shows that computing and memory can be integrated on a large scale, he says. “I feel the paper will shake the common thinking in computer architecture.”

NorthPole runs neural networks: multi-layered arrays of simple computational units programmed to recognize patterns in data. A bottom layer takes in data, such as the pixels in an image; each successive layer detects patterns of increasing complexity and passes information on to the next layer. The top layer produces an output that, for example, can express how likely an image is to contain a cat, a car or other objects.
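The layered structure described above can be sketched in a few lines of Python. This is only an illustrative toy, not NorthPole's actual network or programming model: the layer sizes, the weights, the `forward` helper and the "cat"/"car" labels are all made-up assumptions chosen to show how data flows from a bottom layer of pixels to a top layer of likelihoods.

```python
import math

def relu(value):
    # Simple non-linearity: pass positive signals, suppress negative ones.
    return max(0.0, value)

def dense_layer(inputs, weights, biases, activation):
    # Each unit computes a weighted sum of its inputs plus a bias,
    # then applies the activation function.
    return [
        activation(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]

def softmax(scores):
    # Convert raw scores into likelihoods that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(pixels, layers):
    # Hypothetical helper: pass the data through each layer in turn.
    activations = pixels
    for weights, biases in layers[:-1]:
        activations = dense_layer(activations, weights, biases, relu)
    # The top layer produces one score per candidate object.
    weights, biases = layers[-1]
    scores = dense_layer(activations, weights, biases, lambda v: v)
    return softmax(scores)

# Made-up weights for a 4-pixel "image", one hidden layer of 3 units,
# and 2 output classes. Real networks learn these values from data.
LAYERS = [
    ([[0.5, -0.2, 0.1, 0.4],
      [-0.3, 0.8, 0.2, -0.1],
      [0.1, 0.1, -0.5, 0.6]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.4, 0.2],
      [-0.6, 0.5, 0.3]], [0.0, 0.0]),
]
LABELS = ["cat", "car"]

pixels = [0.9, 0.1, 0.4, 0.7]
probs = forward(pixels, LAYERS)
for label, prob in zip(LABELS, probs):
    print(f"{label}: {prob:.3f}")
```

Every weighted sum here needs both the weights and the incoming data, which is why keeping memory close to the computing units matters for this kind of workload.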

[…]

NorthPole is made of 256 computing units, or cores, each of which contains its own memory. “You’re mitigating the Von Neumann bottleneck within a core,” says Modha, who is IBM’s chief scientist for brain-inspired computing at the company’s Almaden research centre in San Jose.

The cores are wired together in a network inspired by the white-matter connections between parts of the human cerebral cortex, Modha says. This and other design principles — most of which existed before but had never been combined in one chip — enable NorthPole to beat existing AI machines by a substantial margin in standard benchmark tests of image recognition. It also uses one-fifth of the energy of state-of-the-art AI chips, despite not using the most recent and most miniaturized manufacturing processes. If the NorthPole design were implemented with the most up-to-date manufacturing process, its efficiency would be 25 times better than that of current designs, the authors estimate.

[…]

NorthPole brings memory units as physically close as possible to the computing elements in the core. Elsewhere, researchers have been developing more-radical innovations using new materials and manufacturing processes. These enable the memory units themselves to perform calculations, which in principle could boost both speed and efficiency even further.

Another chip, described last month (ref. 2), does in-memory calculations using memristors, circuit elements able to switch between being a resistor and a conductor. “Both approaches, IBM’s and ours, hold promise in mitigating latency and reducing the energy costs associated with data transfers,”

[…]

Another approach, developed by several teams, including one at a separate IBM lab in Zurich, Switzerland (ref. 3), stores information by changing a circuit element’s crystal structure. It remains to be seen whether these newer approaches can be scaled up economically.

Source: ‘Mind-blowing’ IBM chip speeds up AI

Robin Edgar
